Fascinating DeepSeek AI Tactics That Can Help Your Business Devel…

Posted by Jeffery on 25-02-22 07:05 (views: 4, comments: 0)

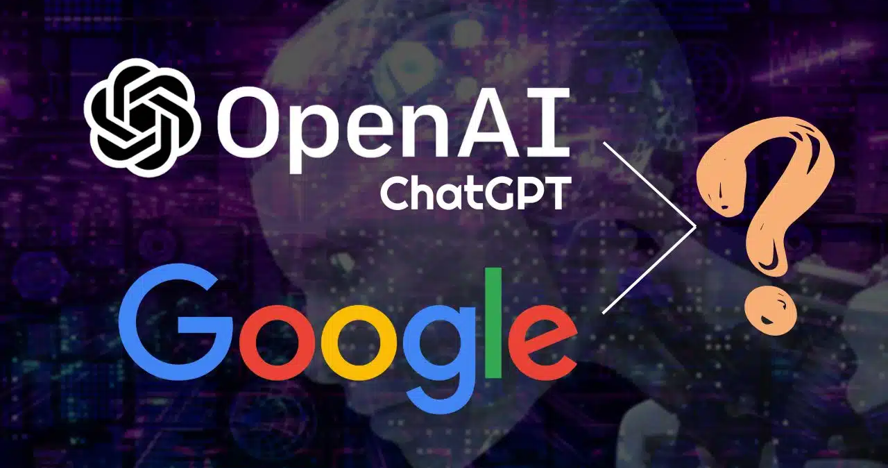

And it turns out that for a neural network of a given size in total parameters, with a given amount of computing, you need fewer and fewer parameters to achieve the same or better accuracy on a given AI benchmark test, such as math or question answering. Graphs show that for a given neural net, on a given computing budget, there is an optimal amount of the neural net that can be turned off to reach a given level of accuracy. AI researchers at Apple, in a report out last week, explain nicely how DeepSeek and similar approaches use sparsity to get better results for a given amount of computing power. In the rapidly evolving field of artificial intelligence (AI), a new player has emerged, shaking up the industry and unsettling the balance of power in global tech. Google CEO Sundar Pichai joined the chorus of praise, acknowledging DeepSeek's "very, very good work" and suggesting that lowering AI costs benefits both Google and the broader AI industry. Approaches from startups based on sparsity have also notched high scores on industry benchmarks in recent years.

DeepSeek is a Chinese artificial intelligence (AI) company based in Hangzhou that emerged a couple of years ago from a university startup. The model is called DeepSeek V3, and it was developed in China by the AI company DeepSeek. The Journal also tested DeepSeek's R1 model itself. DeepSeek's research paper suggests that either the most advanced chips are not needed to create high-performing AI models or that Chinese companies can still source chips in sufficient quantities, or a combination of both. ChatGPT is a term most people are familiar with. I admit that technology has some amazing abilities; it may allow some people to have their sight restored. Sparsity is a kind of magic dial that finds the best match between the AI model you've got and the compute you have available. DeepSeek, a Chinese AI company, recently released a new Large Language Model (LLM) which appears to be equivalently capable to OpenAI's ChatGPT "o1" reasoning model, the most sophisticated it has available. The ability to use only some of the total parameters of a large language model and shut off the rest is an example of sparsity. "If you're in the channel and you're not doing large language models, you're not touching machine learning or data sets."
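To make the idea of using only some of a model's parameters concrete, here is a minimal sketch of top-k expert routing, the mechanism commonly used in mixture-of-experts layers. It is an illustration under stated assumptions, not DeepSeek's implementation: the function names (`top_k_route`, `sparse_layer`), the toy experts, and the router scores are all hypothetical.

```python
import math

def softmax(scores):
    """Convert raw router scores into probabilities."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def top_k_route(router_scores, k):
    """Return (expert_index, weight) pairs for the k highest-scoring experts."""
    probs = softmax(router_scores)
    chosen = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:k]
    norm = sum(probs[i] for i in chosen)  # renormalize over the chosen experts
    return [(i, probs[i] / norm) for i in chosen]

def sparse_layer(x, experts, router_scores, k):
    """Run only the k selected experts; the others stay switched off."""
    routes = top_k_route(router_scores, k)
    output = sum(weight * experts[i](x) for i, weight in routes)
    return output, [i for i, _ in routes]

# Hypothetical toy experts: each one just scales its input.
experts = [lambda x, a=a: a * x for a in (1.0, 2.0, 3.0, 4.0)]
router_scores = [0.1, 2.0, -1.0, 1.5]  # made-up scores for one token

output, active = sparse_layer(10.0, experts, router_scores, k=2)
sparsity = 1 - len(active) / len(experts)  # fraction of experts left unused
```

With four experts and `k=2`, half of the experts are skipped for this token, so the layer does roughly half the expert computation while still holding all four experts' parameters.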

In the paper, titled "Parameters vs FLOPs: Scaling Laws for Optimal Sparsity for Mixture-of-Experts Language Models," posted on the arXiv pre-print server, lead author Samir Abnar of Apple and other Apple researchers, along with collaborator Harshay Shah of MIT, studied how performance varied as they exploited sparsity by turning off parts of the neural net. That's DeepSeek, a revolutionary AI search tool designed for students, researchers, and businesses. The platform now includes improved data encryption and anonymization capabilities, providing businesses and users with increased assurance when using the tool while safeguarding sensitive information. Microsoft has a stake in ChatGPT owner OpenAI, for which it paid $10bn, while Google's AI tool is Gemini. While it is impossible to predict the trajectory of AI development, history and experience have shown themselves to be a better guide than a priori rationalization time and time again. Put another way, whatever your computing power, you can increasingly turn off parts of the neural net and get the same or better results. As you turn up your computing power, the accuracy of the AI model improves, Abnar and team found. More parameters typically mean more computing effort.
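The trade-off between total parameters and computing effort can be sketched with some simple accounting. The example below is a rough illustration, not taken from the paper: the `MoEConfig` class and all of its numbers are hypothetical, sparsity is assumed to mean the fraction of experts that are inactive for a token, and the forward-pass cost uses the common rule of thumb of about 2 FLOPs per active parameter per token.

```python
from dataclasses import dataclass

@dataclass
class MoEConfig:
    """Hypothetical mixture-of-experts model description."""
    shared_params: int      # parameters every token uses (attention, embeddings)
    params_per_expert: int  # parameters inside one expert
    num_experts: int        # experts stored in the model
    active_experts: int     # experts actually run per token

    def total_params(self) -> int:
        return self.shared_params + self.params_per_expert * self.num_experts

    def active_params(self) -> int:
        return self.shared_params + self.params_per_expert * self.active_experts

    def sparsity(self) -> float:
        # Assumed definition: share of experts that are turned off per token.
        return 1 - self.active_experts / self.num_experts

    def forward_flops_per_token(self) -> int:
        # Rule-of-thumb estimate: ~2 FLOPs per active parameter per token.
        return 2 * self.active_params()

# Made-up configuration for illustration only.
cfg = MoEConfig(
    shared_params=1_000_000_000,
    params_per_expert=500_000_000,
    num_experts=64,
    active_experts=8,
)
print(cfg.total_params(), cfg.active_params(), cfg.sparsity())
```

In this made-up configuration the model stores 33 billion parameters but touches only 5 billion per token, which is why raising sparsity can hold computing cost steady while the total parameter count grows.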

The magic dial of sparsity doesn't only shave computing costs, as in the case of DeepSeek; it works in the other direction too: it can also make bigger and bigger AI computers more efficient. Apple has no connection to DeepSeek, but Apple does its own AI research regularly, and so the developments of outside companies such as DeepSeek are part of Apple's continued involvement in the AI research field, broadly speaking. I'm not saying that technology is God; I'm saying that companies designing this technology tend to think they are god-like in their abilities. Let me be clear on what I'm saying here. We are living in a day where we have another Trojan horse in our midst. Transitioning from Greek mythology to modern-day technology, we may have another Trojan horse, and it may be embraced and welcomed into our homes and lives just as that ancient wooden horse once was. The Greeks persuaded the Trojans that the horse was an offering to Athena (the goddess of war), and believing the horse would protect the city of Troy, the Trojans brought the horse inside the city walls, unaware that the wooden horse was full of Greek warriors.
